The page begins with more commentary on the design and functioning of spectrometers, both those that split emitted radiation from high-energy excitation and those that measure reflectances. Dispersion is accomplished by prisms or fine-line gratings. The advent of Charge-Coupled Devices and the pushbroom technique for retrieving the detector charges to create signals are discussed, to establish a basis for building AVIRIS. A diagram relates the resulting signal sequence to a sampled pixel; a second shows, in an image cube format, the final reconstructed image and variations in signal strengths along sample rows and columns. Several other hyperspectral instruments are mentioned.

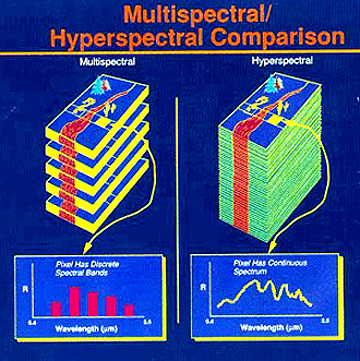

The advantage of a spectrometer operating in space over a multispectral scanner, which samples only a few broad and usually separated spectral intervals, is, as hinted at before, that it samples essentially the full continuum of wavelengths (in practice, with present technology, a series of very narrow bands that are contiguous in wavelength). This illustration helps to clarify the point just stated:

The vital component of any spectrometer is its spectral dispersing device. These instruments have a physical means of spreading radiation composed of differing photon energies (and thus a range of frequencies or, inversely, wavelengths) laterally onto a stretched linear display.

The simplest device is an optical glass prism with a triangular cross-section. A beam of polychromatic light (or of non-visible radiation) arrives at one face of the prism. At this glass surface, rays of different wavelengths bend according to the refractive power of the glass. Because the index of refraction of the glass differs from one wavelength to another, the degree of bending also varies with wavelength. For example, in crown glass the index is 1.528 for violet and 1.514 for red. If the beam strikes one side of a right-triangle prism at a right angle, it continues in the same direction, slowing inside the glass, and then bends by differing amounts as it emerges from the slant (hypotenuse) face on the opposite side. If we instead orient the prism with the hypotenuse face as its base (parallel to the incoming light beam), the beam meets a slanted face first and bends as it enters the prism; the dispersed light then meets the second slanted face and bends further. The net effect is to spread the (visible) light rays according to their effective indices of refraction into a continuous color pattern that forms the wavelength abscissa of a spectral plot (see pages I-2 and I-4).
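To put a number on that spread, the sketch below traces a ray through a prism using Snell's law at both faces, with the crown-glass indices quoted above. It is only an illustration under stated assumptions: the surrounding medium is air (index 1), and the 60° apex angle and 50° angle of incidence are arbitrary choices, not taken from the text (which describes a right-triangle prism).

```python
import math

def prism_deviation(n, apex_deg, incidence_deg):
    """Total deviation (degrees) of a ray passing through a prism of index n.

    Snell's law is applied at the entry and exit faces; the surrounding
    medium is assumed to be air (index 1).
    """
    A = math.radians(apex_deg)
    t1 = math.radians(incidence_deg)
    t2 = math.asin(math.sin(t1) / n)      # refraction on entering the first face
    t3 = A - t2                           # internal angle at the second face
    s4 = n * math.sin(t3)
    if abs(s4) > 1:
        raise ValueError("total internal reflection at the exit face")
    t4 = math.asin(s4)                    # refraction on leaving the second face
    return math.degrees(t1 + t4 - A)

# Crown-glass indices quoted in the text
N_VIOLET, N_RED = 1.528, 1.514

# Hypothetical geometry: 60-degree apex, 50-degree incidence
d_violet = prism_deviation(N_VIOLET, apex_deg=60, incidence_deg=50)
d_red = prism_deviation(N_RED, apex_deg=60, incidence_deg=50)
print(f"violet {d_violet:.2f} deg, red {d_red:.2f} deg, spread {d_violet - d_red:.2f} deg")
```

Violet, with the higher index, is bent more than red; with this geometry the two ends of the visible spectrum separate by only about a degree, which is why the dispersed beam must travel some distance before its colors are usefully spread out.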

A diffraction grating disperses light by a different mechanism. The grating is a metal or glass surface on which fine, straight, parallel grooves are ruled (cut) extremely close together (e.g., 15,000 per inch), so that the spacing between adjacent grooves is comparable to the wavelengths of the Visible-Near IR region. Each groove, acting like a slit, causes polychromatic light to diffract (bend) at angles that depend on wavelength. For an array of closely spaced grooves, each pair separated by a distance d, and light arriving perpendicular to the grating, we apply the diffraction equation nλ = d sin θ, where n is a whole number (1, 2, 3, ...) that identifies the order of the diffracted beam, λ is the wavelength, and θ (theta) is the diffraction (bending) angle for that set of conditions. Thus, a light ray striking the grating is spread out at various angles according to the wavelengths it contains. Some wavelengths carry more photons (are more intense) than others, so by measuring the intensity at each angle we can recast the reflected light energies as a plot of reflectance versus wavelength, yielding the type of spectral curves examined in the previous two pages.
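The diffraction equation can be solved directly for θ. The sketch below does this for the 15,000-lines-per-inch example, assuming normal incidence as in the simple form of the equation; the helper names are illustrative. An order n exists only if nλ/d does not exceed 1, since sin θ cannot be larger than 1.

```python
import math

INCH_M = 0.0254  # one inch in metres

def groove_spacing_m(lines_per_inch):
    """Spacing d between adjacent grooves, in metres."""
    return INCH_M / lines_per_inch

def diffraction_angle_deg(wavelength_m, d_m, order=1):
    """Solve n*lambda = d*sin(theta) for theta (normal incidence).

    Returns None when the requested order cannot occur (sin(theta) > 1).
    """
    s = order * wavelength_m / d_m
    if s > 1:
        return None
    return math.degrees(math.asin(s))

d = groove_spacing_m(15_000)          # about 1.69 micrometres
for wl_um in (0.4, 0.55, 0.7):
    angles = [diffraction_angle_deg(wl_um * 1e-6, d, n) for n in (1, 2, 3)]
    print(wl_um, [None if a is None else round(a, 1) for a in angles])
```

In first order, 0.4 µm light leaves the grating at roughly 14° and 0.7 µm light at roughly 24°, so the visible spectrum fans out over about ten degrees. The third order does not exist at 0.7 µm for this spacing.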

An emission spectrometer, used to analyze material compositions, passes light of discrete wavelengths, representing excitation states of different chemical elements, through a slit and then a prism or diffraction grating onto a recording medium such as a photographic plate. The wavelength-dependent bending produces a series of lines (repeated images of the slit) at varied spacings, whose wavelengths we can measure to identify the particular elements in the sample. The sample is usually heated in a flame or electric arc to force electrons into higher energy states; when the electrons fall back to lower states, they emit light whose wavelengths are fixed by the quantized energy differences. Remember Planck's equation E = hf, where h is Planck's constant and f is the frequency, discussed in the tutorial Introduction.
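As a worked example of E = hf, the sketch below converts an energy drop into the wavelength of the emitted line (λ = c/f). The element and energy levels are an illustrative assumption, not from the text: hydrogen in the simple Bohr model, whose levels lie at -13.6/n² eV. The drop from level 3 to level 2 gives the familiar red hydrogen line.

```python
# Exact SI constants
H = 6.62607015e-34       # Planck constant, J*s
C = 299_792_458.0        # speed of light, m/s
EV = 1.602176634e-19     # joules per electron-volt

def photon_wavelength_nm(delta_e_ev):
    """Wavelength of a photon of energy delta_e_ev, from E = h*f and lambda = c/f."""
    f = delta_e_ev * EV / H          # frequency from Planck's equation
    return C / f * 1e9               # metres -> nanometres

def hydrogen_level_ev(n):
    """Bohr-model energy of hydrogen level n, in eV (illustrative assumption)."""
    return -13.6 / n**2

# Electron falling from level 3 to level 2
delta = hydrogen_level_ev(3) - hydrogen_level_ev(2)
print(f"{delta:.3f} eV -> {photon_wavelength_nm(delta):.1f} nm")
```

A drop of about 1.89 eV corresponds to a line near 656 nm; larger energy drops give shorter wavelengths, which is why each element's set of levels yields its own characteristic pattern of lines.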

A stationary reflectance spectrometer, looking through a collimating lens at a ground scene, breaks the light emanating from that fixed view into its wavelengths. A problem with recording the light arises when the scene moves past the lens (or the spectrometer swings across the scene) at high speed. Older types of detectors did not have enough time to record the ever-changing field of view; that is, the electronics could not sample the dispersed light fast enough to resolve it into the closely spaced wavelengths needed to construct a spectral curve. Instead, they recorded the light as broad bands, in the manner of Landsat-type scanners.

The technology for a scanning spectrometer that could sweep across moving terrain while sampling at narrow wavelength intervals had to await a breakthrough. This breakthrough came with the advent of Charge-Coupled Devices (CCDs). A CCD is a microelectronic semiconductor chip that detects light. Radiation produces an electron charge on the chip in proportion to the number of photons received, which is governed by the intensity and the exposure time. The charge must be rapidly removable, resetting the CCD for the next influx of photons, such as those coming from the next part of the scene.
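The charge-and-reset cycle described above can be modeled very simply. In this sketch the charge is proportional to photon arrival rate times exposure time, as the text states; the quantum efficiency (fraction of photons that free an electron) and full-well capacity (the most charge an element can hold) are hypothetical values added for illustration, not the specification of any real CCD.

```python
def accumulate_charge(photon_rate, exposure_s, quantum_efficiency=0.5, full_well=100_000):
    """Electrons collected by one detector element in one exposure.

    quantum_efficiency and full_well are hypothetical illustrative values.
    """
    electrons = quantum_efficiency * photon_rate * exposure_s
    return min(round(electrons), full_well)   # charge saturates at the full well


class DetectorElement:
    """One element of the array: integrate charge, read it out, reset."""

    def __init__(self):
        self.charge = 0

    def expose(self, photon_rate, exposure_s):
        self.charge = accumulate_charge(photon_rate, exposure_s)

    def read_and_reset(self):
        value, self.charge = self.charge, 0   # removing the charge readies the element
        return value


pixel = DetectorElement()
pixel.expose(photon_rate=1e6, exposure_s=0.001)   # brighter scene or longer dwell -> more charge
print(pixel.read_and_reset(), pixel.charge)        # second value is 0 after the reset
```

Doubling either the intensity or the exposure time doubles the collected charge until the element saturates; reading the charge out empties the element for the next part of the scene.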

A chip is extremely small (tens of micrometers). It is made of some light-sensitive material, such as the silicon used in computer microchips. Normally, we mount hundreds of them on an insulator backing, in a line or a two-dimensional array. Consider a linear array of, say, 2,000 chips per inch. If we allow light to enter through lenses and strike the array simultaneously from one end to the other, then each chip will receive light from a small part of the scene. Adjacent chips will get their light from adjacent ground or air locations. Thus, the instrument samples a line of finite width and length on the ground or in the air.

Each chip, accruing a charge representative of the arriving photon batch, is a pixel whose spatial resolution depends on the chip size, the focal length of the optics, and the height (distance) of the chip array above (from) the scanned scene. After an instant of dwell time over the scene, all of the chips discharge sequentially (producing a signal stream for a recording device) to reset for the next batch. The chips have a high signal-to-noise (S/N) ratio, which allows enough buildup of electrons in the brief moment, coupled with gain amplification, to yield usable signals. After a single exposure, as the spacecraft moves on a small distance, the process repeats for the next line of the ground scene. The advance of the linear array, similar to the forward motion of a wide "pushbroom", generates a succession of varying electronic signals that the instrument converts into an image, in which the gray levels correspond to the signal strengths.
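A minimal sketch of the pushbroom process follows, under simplifying assumptions: each element's footprint on flat ground at nadir is pitch × altitude / focal length, and each exposure's charges are read out one after another into a serial stream that is then cut back into image rows. The 800 km altitude and 1 m focal length are hypothetical; the pitch comes from the 2,000-chips-per-inch example.

```python
PITCH_M = 0.0254 / 2000      # 2,000 chips per inch, the example in the text

def ground_sample_distance_m(pixel_pitch_m, altitude_m, focal_length_m):
    """Ground footprint of one element at nadir (flat terrain, simple lens)."""
    return pixel_pitch_m * altitude_m / focal_length_m

def pushbroom(scene):
    """Simulate a linear array advancing one ground line per exposure.

    `scene` is a list of ground lines; each line holds the signal each
    element sees across the array. After each exposure the elements
    discharge one after another into a single serial stream, which is
    then cut back into rows of array width to rebuild the image.
    """
    width = len(scene[0])
    stream = []
    for ground_line in scene:            # platform advances one line per exposure
        for charge in ground_line:       # elements discharge sequentially
            stream.append(charge)
    return [stream[i:i + width] for i in range(0, len(stream), width)]

scene = [[10, 12, 11], [30, 31, 29], [50, 48, 52]]
print(pushbroom(scene))
print(round(ground_sample_distance_m(PITCH_M, 800_000, 1.0), 2))   # hypothetical orbit and lens
```

With a 12.7 µm pitch, the assumed 800 km altitude and 1 m focal length give a ground pixel of about 10 m; halving the altitude halves the footprint.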

A variant of the linear array is the two-dimensional array, which receives and records light from rectangular ground scenes on multiple lines of chips. Another mode uses a rocking or rotating mirror to sweep the incoming light across the array.

A sensor that uses CCD elements can be multispectral if it uses several arrays, each dedicated to a wavelength band whose bandwidth is set by a bandpass filter. SPOT's sensor uses CCD arrays and red, green, and infrared filters to create its multispectral data set.
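The cost of broad filter bands can be shown numerically. This sketch uses a hypothetical reflectance spectrum (flat at 0.5 with a narrow absorption dip near 700 nm) and idealized box-shaped filters that simply average the spectrum over each band; real filters have sloping edges, so this is only an illustration.

```python
import math

def spectrum(wl_nm):
    """Hypothetical reflectance: flat 0.5 with a narrow absorption dip at 700 nm."""
    return 0.5 - 0.3 * math.exp(-((wl_nm - 700) ** 2) / (2 * 5 ** 2))

def band_means(start_nm, stop_nm, width_nm):
    """Average 1-nm samples within each band, like an ideal box-shaped bandpass filter."""
    bands = []
    for lo in range(start_nm, stop_nm, width_nm):
        samples = [spectrum(w) for w in range(lo, lo + width_nm)]
        bands.append((lo, sum(samples) / len(samples)))
    return bands

narrow = band_means(400, 1000, 10)    # contiguous 10-nm channels (hyperspectral)
broad = band_means(400, 1000, 100)    # 100-nm channels (multispectral-like)
print("deepest 10-nm channel :", min(narrow, key=lambda b: b[1]))
print("deepest 100-nm channel:", min(broad, key=lambda b: b[1]))
```

The 10-nm channels record the dip as a drop to roughly 0.3, while the 100-nm bands dilute it to a barely noticeable change near 0.48, so the diagnostic feature effectively disappears.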

But these broad-band sensors do not provide hyperspectral data; that is, they do not sample the spectrum in narrow intervals. To accomplish this, the sensor's detector must consist of many parallel rows of chips, each dedicated to a narrow wavelength interval, whose charges the recorder can sample (discharge) extremely rapidly. Imagine a two-dimensional array that is several hundred chips wide and 200 or so long. Let the light enter the sensor through a telescope or focusing lens, strike a moving mirror, and then pass through a diffraction grating, which disperses the light over a spectral range in the direction of the array length (which is also the direction of the sensor's forward motion). At one instantaneous position of the mirror, the light from the ground activates the first chip across the width of the array and, at the same time, the chips for the other wavelengths along the array length (the spectral dimension). The recorder electronically samples, lengthwise, the first chip in each line. Next, the mirror moves widthwise to the next ground spot, and the light from it spreads its spectral dispersion lengthwise. The mirror continues progressively until it completes the equivalent of one ground sweep. Meanwhile, the sensor moves on to look at the next ground position, and the whole scanning-dispersing process repeats.

As the instrument proceeds along its flight path or orbit, the final result is a vast collection of data with both spatial and hyperspectral content. From this data set, we can construct images of small ground plots in individual narrow spectral bands. Alternatively, for any pixel position across the width, we can tie its spectral data to the wavelengths sampled lengthwise and plot a spectral curve for that piece of the surface. With special modifications, we can also image the atmosphere, if that is the target.
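The two ways of using the data set described above amount to slicing a three-dimensional array along different axes. The sketch assumes a hypothetical layout `cube[line][sample][band]` (along-track, across-track, spectral); actual instruments and file formats may order the axes differently.

```python
def make_cube(n_lines, n_samples, n_bands, value):
    """Build a cube indexed cube[line][sample][band] (along-track, across-track, spectral)."""
    return [[[value(l, s, b) for b in range(n_bands)]
             for s in range(n_samples)]
            for l in range(n_lines)]

def band_image(cube, band):
    """One narrow-band image: every ground pixel at a single wavelength channel."""
    return [[spectrum[band] for spectrum in line] for line in cube]

def pixel_spectrum(cube, line, sample):
    """The full spectral curve recorded for one ground pixel."""
    return list(cube[line][sample])

# Toy cube whose values encode their own position: 100*line + 10*sample + band
cube = make_cube(3, 4, 5, lambda l, s, b: 100 * l + 10 * s + b)
print(band_image(cube, 2))          # 3 x 4 image for channel 2
print(pixel_spectrum(cube, 2, 1))   # 5-channel curve for line 2, sample 1
```

A band image keeps both spatial dimensions and fixes the wavelength; a pixel spectrum fixes the ground position and keeps the wavelength dimension.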

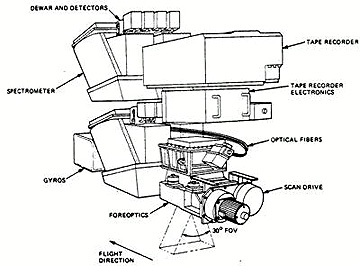

This, in a general way, describes how AVIRIS and other hyperspectral imaging spectrometers operate. JPL's AVIRIS uses diffraction gratings with two sets of detector arrays, one with silicon chips to sense in the visible range and the other with indium antimonide (InSb) chips for wavelengths in the Near-IR to Short-Wave-IR range. A refrigeration unit cools the detectors with liquid nitrogen for optimum performance. There are 224 detectors (channels) in the spectral dimension, extending over a range of 0.38 to 2.50 µm. This arrangement gives each chip a spectral resolution of about 0.01 µm. The unit the remote sensing industry adopted for reporting hyperspectral data is the nanometer (nm); 1000 nm = 1 µm. Stated this way, the resolution is about 10 nm, and the range of coverage is 380 to 2500 nm (0.38 - 2.5 µm). AVIRIS gathers its light through a 30° field of view, which it divides into 614 individual pixels in the width (across-flight) direction. An oscillating mirror scans the scene and sends the incoming radiation sweeping across the array. The spatial resolution derived from this depends on the platform height. A typical mission, mounting AVIRIS on a NASA ER-2 aircraft, produces a spatial resolution of about 20 meters, but we can improve that to five meters by flying at lower altitudes, which, of course, narrows the width of the ground coverage.
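The figures quoted for AVIRIS can be checked with simple geometry. This sketch assumes flat ground, a swath equal to 2·H·tan(FOV/2), and samples that evenly divide that swath. The 20 km altitude (typical of the ER-2) and the 5.7 km low-altitude case are assumptions for illustration. The estimate ignores the instrument's actual instantaneous field of view and any overlap between samples, so it only shows that the quoted resolutions are of the right order.

```python
import math

def channel_spacing_nm(start_nm, stop_nm, n_channels):
    """Average spectral interval per channel if the channels tile the range contiguously."""
    return (stop_nm - start_nm) / n_channels

def swath_width_m(altitude_m, fov_deg):
    """Across-track ground coverage for a full field of view fov_deg over flat ground."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)

spacing = channel_spacing_nm(380, 2500, 224)          # figures quoted for AVIRIS
for altitude in (20_000, 5_700):                      # assumed flight altitudes, metres
    swath = swath_width_m(altitude, 30)
    print(f"H={altitude} m: swath {swath / 1000:.1f} km, ~{swath / 614:.1f} m per sample")
print(f"channel spacing ~{spacing:.2f} nm")
```

Spreading 2,120 nm over 224 channels gives about 9.5 nm each, consistent with the nominal 10 nm. At 20 km the swath is about 10.7 km with samples roughly 17 m apart; near 5.7 km the spacing drops to about 5 m, but the swath shrinks to about 3 km.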

This is a picture of AVIRIS:

And this is an example of an AVIRIS spectral curve for a single pixel:

The stacking under the pixel at top is meant to denote the 224 channels, each 10 nm wide, whose values were plotted to produce this curve.

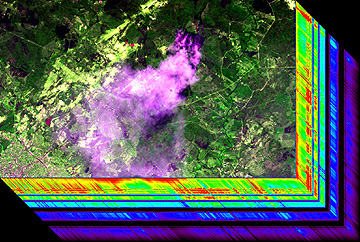

Another way to visualize the relationship between an image made in the usual color-compositing manner (but using just three 10 nm channels at different wavelengths) and the spectral variations over the sampled interval is to depict both as a Hyperspectral Cube:

Here, the top of the cube is a quasi-natural color image made from the reflectances in three narrow spectral channels in the visible region. On the left and right front sides are color representations of the spectra for each of the pixels along the top edges of the cube, where the top image meets the spectral dimension. The top corresponds to the low end of the spectrum and the bottom to the high end. Black through purple and blue are assigned to low reflectances; yellow through red and then white denote high reflectances. AVIRIS imaged the area shown during an aircraft flight over the southern part of San Francisco Bay. Landing strips at Moffett Field, next to NASA's Ames Research Center, locate that part of the image within Mountain View, Calif.
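Building the top face of such a cube means choosing three channels and scaling their values for display. The sketch below makes two hypothetical assumptions: the 224 channel centres are evenly spaced from 380 to 2500 nm (real AVIRIS centres are set by calibration), and 640, 550, and 460 nm are used as red, green, and blue targets. A simple linear contrast stretch then maps each band's reflectances onto 0-255 display levels.

```python
def band_centers(start_nm=380.0, stop_nm=2500.0, n=224):
    """Hypothetical, evenly spaced channel centres spanning the stated AVIRIS range."""
    step = (stop_nm - start_nm) / (n - 1)
    return [start_nm + i * step for i in range(n)]

def nearest_band(centers, target_nm):
    """Index of the channel whose centre lies closest to target_nm."""
    return min(range(len(centers)), key=lambda i: abs(centers[i] - target_nm))

def stretch_to_byte(values):
    """Linear contrast stretch of reflectances onto 0..255 display levels."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0 for _ in values]
    return [round(255 * (v - lo) / (hi - lo)) for v in values]

centers = band_centers()
rgb_bands = [nearest_band(centers, wl) for wl in (640, 550, 460)]  # red, green, blue
print(rgb_bands, [round(centers[i], 1) for i in rgb_bands])
```

Each chosen band's image would be stretched separately and assigned to one display color. The color-coded sides of the cube use the same idea, but they map reflectance to a color ramp (black/blue for low, red/white for high) instead of to a single primary.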

Since AVIRIS, many other imaging spectrometers have been built and put into operation. A list of most of these is available in tabular form.

One of these is HYDICE, developed by the Navy, for aerial use. It has 210 channels, each with a spectral resolution of about 10 nm, extending from 413 to 2,504 nm. It uses a prism as the spectral dispersion device. The spatial dimension is defined by a row of 320 pixels. When flown at low altitudes, HYDICE yields images with resolutions approaching one meter.

Another instrument, developed in Europe, is DAIS, the Digital Airborne Imaging Spectrometer, which uses a diffraction grating to obtain spectral coverage between 450 and 2,500 nm. Its 72 channels collect radiation in three spectral intervals: 400-1,200 nm (bandwidth 15-30 nm); 1,500-1,800 nm (bandwidth 45 nm); and 2,000-2,500 nm (bandwidth 20 nm). The gaps coincide with atmospheric absorption bands. Separate sensors sharing the same optics add a single broad-band channel between 3,000 and 5,000 nm and six channels that operate within parts of the 8,000-14,000 nm interval. These bands provide important information on thermal emissions from ground objects. Flown low, DAIS provides one-meter resolution imagery for strips a few kilometers wide.

For most systems, a diffraction grating or a prism accomplishes the dispersion, but other techniques for separating the spectrum include interference filters, acousto-optic filters, liquid crystal tunable filters, Michelson interferometers, Fourier Transform interferometers, and multi-order etalons. Chips made of mercury cadmium telluride (MCT) or platinum silicide are sensitive to useful wavelength intervals in the infrared.
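Interferometers separate the spectrum in a less obvious way. They record an interferogram (intensity versus optical path difference), and the spectrum is recovered with a Fourier transform. The sketch below is idealized and assumes two monochromatic lines placed exactly on discrete-Fourier-transform bins, no constant (DC) term, no noise, and no apodization. The line positions (bins 20 and 45) are hypothetical.

```python
import cmath
import math

def interferogram(wavenumber_bins, n_samples):
    """Idealized interferogram: one cosine per monochromatic line, sampled at
    n_samples equally spaced optical path differences."""
    return [sum(math.cos(2 * math.pi * k * x / n_samples) for k in wavenumber_bins)
            for x in range(n_samples)]

def spectrum_from_interferogram(samples):
    """Recover line strengths with a plain discrete Fourier transform (magnitudes)."""
    n = len(samples)
    return [abs(sum(samples[x] * cmath.exp(-2j * math.pi * k * x / n) for x in range(n)))
            for k in range(n // 2)]

lines = (20, 45)                        # hypothetical spectral lines (bin numbers)
spec = spectrum_from_interferogram(interferogram(lines, 256))
peaks = sorted(range(len(spec)), key=spec.__getitem__, reverse=True)[:2]
print(sorted(peaks))
```

The two strongest bins of the recovered spectrum are the two lines that went in. Practical instruments use a fast Fourier transform and must also handle the DC term, finite path range, and noise.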

Primary Author: Nicholas M. Short, Sr. email: nmshort@epix.net